Video abstraction and anomaly detection for stationary cameras

Jun 25th, 2010 | By Dr. Paolo Buono | Category: Featured Articles, News

In recent years, video analysis and video surveillance have become increasingly important because of the widespread public attention paid to world events, which has exposed the shortcomings of current technology. Video-based analysis focuses either on real-time threat detection or on recording video for subsequent forensic investigation.

Cities often have a well-defined network of surveillance cameras that provides comprehensive coverage of specific locations, such as buildings or other sensitive areas. The main goal of these systems is to help people detect and identify potential threats or suspicious events occurring within the recorded timeframe.

It has been demonstrated that human attention can drop below acceptable levels very quickly (after only 20 minutes), even in trained observers [2]. As a consequence, manual video monitoring can be very ineffective. Real-time algorithms for object identification, detection and tracking are therefore needed to alert surveillance staff when suspicious events occur.

Current technology does not allow purely automatic video interpretation that can answer a wide range of possible questions, and some events may be highly unpredictable. Effective video analysis therefore requires combining the perception, flexibility, creativity and general knowledge of the human mind with the enormous storage capacity and computational power of computers. Such analysis is often very time-consuming, so the whole process needs to be faster, allowing analysts to focus their efforts on the relevant parts of the video without wasting time on meaningless segments. Visual analytics can be very helpful in this field.

A comprehensive review of the state of the art in automated video surveillance technologies can be found in [8]. The review covers several aspects: image processing, surveillance systems and system design. One of the most prominent is computer vision algorithms. Another concerns the ways in which these different algorithms and approaches can be integrated to build a complete surveillance system. The last concerns large-scale surveillance systems, such as those deployed in public transportation (subways, airports, etc.) or in large buildings where many people are concentrated in small spaces.

User interfaces that allow operators to work with these detection systems also play a key role. From this point of view, [3] presents a “surveillance index browser”, part of a larger architecture that also includes a face-recognition module. The user interface shows, on a timeline, an overview of all the events detected by the system in a particular time frame. A second timeline provides a zoomed-in view of any video segment chosen by the user. One window displays the output of the tracking camera, while a second window displays a zoomed-in video feed of the moving object.
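The two-level timeline described above can be illustrated with a small sketch. The event format `(timestamp, label)` and the functions `overview` and `zoom` are hypothetical and are not taken from [3]: `overview` counts detected events per time bin over the whole time frame, while `zoom` extracts the events of a user-selected segment for the second, detailed timeline.

```python
from typing import List, Tuple

Event = Tuple[float, str]  # hypothetical format: (timestamp in seconds, event label)

def overview(events: List[Event], start: float, end: float, num_bins: int) -> List[int]:
    """Count events per equal-width bin over [start, end) for the overview timeline."""
    width = (end - start) / num_bins
    counts = [0] * num_bins
    for t, _ in events:
        if start <= t < end:
            counts[min(int((t - start) / width), num_bins - 1)] += 1
    return counts

def zoom(events: List[Event], seg_start: float, seg_end: float) -> List[Event]:
    """Return only the events inside the segment chosen by the user."""
    return [e for e in events if seg_start <= e[0] < seg_end]

events = [(5.0, "person"), (15.0, "car"), (18.0, "person"), (95.0, "car")]
bins = overview(events, 0.0, 100.0, 10)   # [1, 2, 0, 0, 0, 0, 0, 0, 0, 1]
detail = zoom(events, 10.0, 20.0)         # [(15.0, 'car'), (18.0, 'person')]
```

The overview gives the operator a quick sense of where activity clusters; the zoomed list is what the second timeline would render in detail.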

Another interesting approach is described in [4]. The tool strikes a good balance between the computer, which devotes its resources to searching the video data, and the user, who focuses on interpreting the results in the hope of gaining insight. The application supports a number of search parameters, such as color, shape, texture and object detection. Once a search is started, the user may continue her/his analysis of the video. An advantage of this system is that partial results are shown as soon as they are found. If some of these matches look promising, the user may start further concurrent searches while waiting for others to complete, or abandon unpromising leads altogether.
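The idea of showing partial results as soon as they are found maps naturally onto a lazy generator. The sketch below assumes a hypothetical color-only search over frames stored as NumPy RGB arrays (`color_search` and its parameters are illustrative, not the interface of [4]); the caller receives each match immediately and can drop an unpromising search at any time by closing its generator.

```python
import numpy as np
from typing import Iterator, Sequence, Tuple

def color_search(
    frames: Sequence[np.ndarray],
    target_rgb: Tuple[float, float, float],
    max_dist: float,
) -> Iterator[int]:
    """Yield the index of each frame whose mean color lies within max_dist
    (Euclidean RGB distance) of target_rgb, as soon as that frame is scanned."""
    target = np.asarray(target_rgb, dtype=float)
    for i, frame in enumerate(frames):
        mean_color = frame.reshape(-1, 3).mean(axis=0)  # average RGB of the frame
        if np.linalg.norm(mean_color - target) <= max_dist:
            yield i  # partial result: hand it to the user immediately

# Two searches whose results arrive incrementally.
frames = [np.full((4, 4, 3), c, dtype=np.uint8)
          for c in [(255, 0, 0), (0, 0, 255), (250, 5, 5), (0, 0, 250)]]
red = color_search(frames, (255, 0, 0), max_dist=20)
blue = color_search(frames, (0, 0, 255), max_dist=20)
first_red = next(red)       # 0, available before the remaining frames are scanned
red.close()                 # abandon the red lead; the blue search is unaffected
blue_matches = list(blue)   # [1, 3]
```

Interleaving generators is only a single-threaded stand-in for concurrency; a real system would run the searches in parallel workers and push matches to the interface as they arrive.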

DOTS [1] is an indoor multi-camera surveillance system designed for an office setting. Its user interface displays an overview of the available cameras as a series of small thumbnails. Using these cameras, a person can be tracked and his/her position estimated by mapping the person’s foreground shapes onto a 3D model of the office, so that the person’s changing position can be followed across multiple cameras. A floor plan displays the current position of the employees at any given instant. The currently tracked person is shown in a larger preview area of the interface, together with the person’s detected face. The system’s main advantage is that it operates in a known, stationary setting, although this can also be seen as its main disadvantage.
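DOTS maps foreground shapes onto a 3D model of the office. A much simpler approximation, swapped in here purely for illustration and not the method of [1], is to project the bottom-center of a person’s bounding box (the feet) onto the floor plan through a per-camera 3×3 homography. The calibration values and function names below are hypothetical.

```python
import numpy as np
from typing import Tuple

BBox = Tuple[float, float, float, float]  # x, y, width, height in image pixels

def foot_point(bbox: BBox) -> Tuple[float, float]:
    """Bottom-center of the bounding box, assumed to be where the person touches the floor."""
    x, y, w, h = bbox
    return (x + w / 2.0, y + h)

def image_to_floor(H: np.ndarray, point: Tuple[float, float]) -> Tuple[float, float]:
    """Map an image point to floor-plan coordinates using a 3x3 homography H."""
    u, v = point
    p = H @ np.array([u, v, 1.0])
    return (p[0] / p[2], p[1] / p[2])  # convert back from homogeneous coordinates

# Hypothetical calibration for one camera: 100 pixels = 1 metre, no rotation.
H_cam1 = np.diag([0.01, 0.01, 1.0])
position = image_to_floor(H_cam1, foot_point((100, 50, 40, 150)))  # approximately (1.2, 2.0)
```

Because each camera would have its own homography into the same floor-plan coordinates, detections of the same person from different cameras land close together, which is what makes hand-over between cameras possible in such a scheme.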

Vural and Akgul [9] use eye-gaze analysis to produce, by means of video abstraction techniques [6], a condensed video that combines the segments showing the actions the operator focused on or overlooked. In this way, relevant parts of the video can be reviewed efficiently and rapidly, without having to go through the whole video.
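The following sketch only illustrates the general idea of separating activity the operator looked at from activity they missed; it is not the algorithm of [9]. It assumes, hypothetically, that each frame comes with at most one motion bounding box and one gaze point from an eye tracker, and `split_by_gaze` is an illustrative name.

```python
from typing import List, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # x, y, width, height of the detected motion

def split_by_gaze(
    motion_boxes: Sequence[Optional[Box]],
    gaze_points: Sequence[Tuple[float, float]],
) -> Tuple[List[int], List[int]]:
    """Return (attended, overlooked) frame indices for frames that contain motion."""
    attended: List[int] = []
    overlooked: List[int] = []
    for i, (box, (gx, gy)) in enumerate(zip(motion_boxes, gaze_points)):
        if box is None:
            continue  # no activity in this frame: leave it out of the synopsis
        x, y, w, h = box
        if x <= gx < x + w and y <= gy < y + h:
            attended.append(i)    # the operator was looking at the moving object
        else:
            overlooked.append(i)  # motion happened while the gaze was elsewhere
    return attended, overlooked

boxes = [None, (10, 10, 20, 20), (10, 10, 20, 20), (50, 50, 5, 5)]
gaze = [(0, 0), (15, 15), (100, 100), (52, 52)]
attended, overlooked = split_by_gaze(boxes, gaze)  # ([1, 3], [2])
```

Either list could then be passed to a synopsis step that concatenates the selected frames into a shorter video.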

An approach similar to our own is used in [7], where “slit-tear visualizations” are drawn as lines on the source video. For every frame, the system writes the pixels beneath each line onto a timeline. For example, if such a line is drawn across a road, the resulting timeline shows the shapes of the cars passing over it; the slower a car, the more elongated its shape. If nothing is happening, the line keeps writing the same pixels. Any event occurring over the line is therefore easy to identify: the static background pixels form a uniform pattern against which foreground objects stand out.
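A slit-tear timeline can be sketched in a few lines of NumPy. The sketch assumes frames stored as an array of shape (T, H, W, 3) and a straight line given by two hypothetical endpoints; it samples the pixels under the line with nearest-neighbour rounding and stacks one column per frame, so time runs from left to right. This is a simplified reading of the technique in [7], not its implementation.

```python
import numpy as np
from typing import Optional, Tuple

def slit_tear(
    frames: np.ndarray,
    p0: Tuple[float, float],
    p1: Tuple[float, float],
    num_samples: Optional[int] = None,
) -> np.ndarray:
    """Return an image whose column t holds the pixels under the line p0-p1 in frame t."""
    (x0, y0), (x1, y1) = p0, p1
    if num_samples is None:
        num_samples = int(max(abs(x1 - x0), abs(y1 - y0))) + 1  # about one sample per pixel
    xs = np.rint(np.linspace(x0, x1, num_samples)).astype(int)
    ys = np.rint(np.linspace(y0, y1, num_samples)).astype(int)
    columns = [frame[ys, xs] for frame in frames]  # each column: (num_samples, 3)
    return np.stack(columns, axis=1)               # shape (num_samples, T, 3)

# A bright "car" covers part of the vertical line x = 5 during frame 2 only.
frames = np.zeros((5, 10, 10, 3), dtype=np.uint8)
frames[2, 3:6, 4:7] = 255
timeline = slit_tear(frames, (5, 0), (5, 9))
```

Frames in which nothing crosses the line produce identical background columns, so the single bright patch in column 2 is immediately visible; an object lingering over the line for more frames would produce a correspondingly wider patch.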

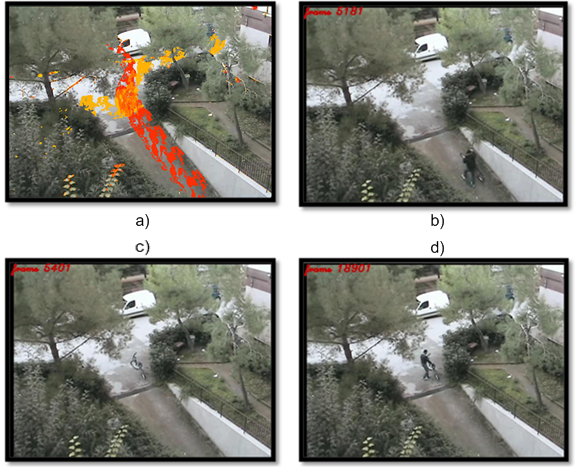

Another interesting approach can be found in [5], which computes a single image summarizing the activity in a scene by displaying traces of movement across it. The tool is intended for stationary cameras and is useful when it is known what happened but the precise details of how and when are unknown (e.g., a bicycle is stolen, but it is not known when or by whom). The image uses a red-to-yellow color scale to show how old the events are. By clicking on areas of interest, the human investigator can browse and review the video segments that depict the events of interest.
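A summary image of this kind can be approximated with a motion-history-style computation, swapped in here for illustration and not necessarily the exact procedure of [5]. The sketch assumes grayscale frames of shape (T, H, W), a median background (valid only if moving objects cover each pixel in fewer than half the frames), and a hypothetical difference threshold. Each pixel records the last frame in which it moved and is colored from red (oldest) to yellow (newest), matching the ordering of the traces in the bike example.

```python
import numpy as np

def activity_summary(frames: np.ndarray, threshold: float = 30.0) -> np.ndarray:
    """Summarize motion in grayscale frames (T, H, W) as an RGB image (H, W, 3)."""
    frames = frames.astype(float)
    background = np.median(frames, axis=0)        # static scene estimate
    last_motion = np.full(frames.shape[1:], -1)   # -1 means "never moved"
    for t, frame in enumerate(frames):
        moving = np.abs(frame - background) > threshold
        last_motion[moving] = t                   # remember the most recent motion
    out = np.repeat(background[..., None], 3, axis=2)  # gray background as RGB
    moved = last_motion >= 0
    age = last_motion[moved] / max(len(frames) - 1, 1)  # 0 = oldest, 1 = newest
    # Red (255, 0, 0) for old activity, shading to yellow (255, 255, 0) for recent activity.
    out[moved] = np.stack([np.full_like(age, 255.0), 255.0 * age, np.zeros_like(age)], axis=1)
    return out.clip(0, 255).astype(np.uint8)

frames = np.zeros((5, 4, 4))
frames[0, 0, 0] = 200   # early movement
frames[4, 3, 3] = 200   # late movement
summary = activity_summary(frames)
```

Keeping the per-pixel `last_motion` map, rather than only the colored image, is what would let an interface translate a click on the summary back to the frames involved.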

An example of an image produced by the technique is shown in Figure 1 (a), in which all the movements that occurred in the scene are depicted with a red-yellow map. This technique has been employed in several scenarios. One of them involves a video camera looking down on a small crossroad. In Figure 1, a cyclist passes by (b) and leaves his bike against a nearby wall (c). After some time, another person enters the area, steals the bike (c) and rides away. The resulting abstract image (a) clearly shows two differently colored movement traces: the red trace is generated by the movement of the cyclist who leaves the bike, while the yellow trace is generated by the person who steals it.

With this tool, the human investigator can draw a rectangular selection over the image at the point where the two traces cross (where the bike was left); the system then plays a segment containing all the frames in which movement was registered inside the selected area. In this example, after the selection, the investigator is first shown the part of the video where the cyclist leaves the bike and then the part where the thief steals it and rides away.
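Selecting an area and playing back the matching frames amounts to a query over per-frame motion masks. A minimal sketch, assuming boolean masks of shape (T, H, W) are already available (for example from background subtraction) and using hypothetical function names; consecutive hits are merged into playable segments, so in the bike example one would expect one segment for the cyclist and one for the thief.

```python
import numpy as np
from typing import List, Tuple

def frames_with_motion_in(
    motion_masks: np.ndarray, rect: Tuple[int, int, int, int], min_pixels: int = 1
) -> List[int]:
    """Indices of frames with at least min_pixels moving pixels inside rect (x, y, w, h)."""
    x, y, w, h = rect
    region = motion_masks[:, y:y + h, x:x + w]
    counts = region.sum(axis=(1, 2))  # moving pixels inside the selection, per frame
    return [int(t) for t in np.nonzero(counts >= min_pixels)[0]]

def group_segments(indices: List[int], max_gap: int = 1) -> List[Tuple[int, int]]:
    """Merge frame indices separated by at most max_gap into (first, last) segments."""
    segments: List[Tuple[int, int]] = []
    for t in indices:
        if segments and t - segments[-1][1] <= max_gap:
            segments[-1] = (segments[-1][0], t)  # extend the current segment
        else:
            segments.append((t, t))              # start a new segment
    return segments

masks = np.zeros((10, 8, 8), dtype=bool)
masks[2:4, 1, 1] = True   # first visit to the selected area
masks[7, 1, 1] = True     # second, later visit
masks[5, 6, 6] = True     # motion elsewhere, outside the selection
hits = frames_with_motion_in(masks, (0, 0, 3, 3))  # [2, 3, 7]
segments = group_segments(hits)                    # [(2, 3), (7, 7)]
```

The `max_gap` parameter trades off between merging brief interruptions into one clip and keeping separate events apart.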

One of the advantages of this technique is its general-purpose applicability: it has been tested and proved effective in both outdoor and indoor settings, and users are able to reconstruct the story in a very short time, rapidly pinpointing the object of the search even when it was not known beforehand.

References:

[1] Girgensohn, A., Kimber, D., Vaughan, J., Yang, T., Shipman, F., Turner, T., Rieffel, E., Wilcox, L., Chen, L. and Dunnigan, T. 2007. DOTS: support for effective video surveillance. In Proceedings of the 15th International conference on Multimedia (Augsburg, Germany, September 24–27, 2007). Multimedia ’07. ACM Press, New York, NY, USA, 423–432.

[2] Green, M. W. 1999. The appropriate and effective use of security technologies in U.S. schools. A guide for schools and law enforcement agencies. Technical report, Sandia National Labs, Albuquerque, NM, USA.

[3] Hampapur, A., Brown, L., Connell, J., Ekin, A., Haas, N., Lu, M., Merkl, H. and Pankanti, S. 2005. Smart video surveillance: exploring the concept of multiscale spatiotemporal tracking. IEEE Signal Proc. Mag. 22,2 (March 2005), 38–51.

[4] Huston, L., Sukthankar, R., Campbell, J. and Pillai, P. 2004. Forensic video reconstruction. In Proceedings of the ACM 2nd International workshop on Video surveillance & sensor networks (New York, NY, USA, October 15, 2004). VSSN ’04. ACM Press, New York, NY, USA, 20–28.

[5] Buono, P. and Simeone, A. 2010. Video abstraction and detection of anomalies by tracking movements. In Proceedings of AVI 2010 (Rome, Italy, May 26–28, 2010). ACM Press, New York, NY, USA, 249–252. ISBN 978-1-4503-0076-6.

[6] Pritch, Y., Rav-Acha, A., Gutman, A. and Peleg, S. 2007. Webcam synopsis: Peeking around the world. In Proceedings of the IEEE 11th International Conference on Computer Vision (Rio de Janeiro, Brazil, October 14–20, 2007). ICCV 2007. IEEE Computer Society, Los Alamitos, CA, USA, 1–8.

[7] Tang, A., Greenberg, S. and Fels, S. 2008. Exploring video streams using slit-tear visualizations. In Proceedings of the working conference on Advanced Visual Interfaces (Napoli, Italy, May 28–30, 2008). AVI ’08. ACM Press, New York, NY, USA, 191–198.

[8] Valera, M. and Velastin, S. 2005. Intelligent distributed surveillance systems: a review. IEE Proc., Vis. Image Signal Process. 152,2 (April 2005), 192–204.

[9] Vural, U. and Akgul, Y. 2009. Eye-gaze based real-time surveillance video synopsis. Pattern Recognition Letters, 30,12 (September 2009), 1151–1159.